The differences between OrioleDB and Neon

In a recent Hacker News discussion, there was some confusion about the differences between OrioleDB and Neon. Both look alike at first glance. Both promise a "next‑gen Postgres". Both have support for cloud‑native storage.

This post explains how the two projects differ in practice, and why OrioleDB is much more than an undo log for PostgreSQL.

The Core Differences

OrioleDB

OrioleDB is a Postgres extension. It implements a Table Access Method to replace the default storage method (Heap), providing the following key features:

- UNDO-based MVCC, which minimizes table bloat;

- IO-friendly copy‑on‑write checkpoints with very compact row‑level WAL;

- An effective shared memory caching layer based on squizzled pointers.
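To make the first bullet concrete, here is a toy Python model of UNDO-based MVCC: the table keeps only the newest row version, updated in place, and older versions live in an undo chain that readers walk back through. This is a simplified sketch, not OrioleDB's actual data structures. It assumes every transaction has committed and that transaction IDs are assigned in commit order, so a version is visible when its `xid` is at or below the reader's snapshot. `Row`, `UndoRecord`, `update`, `read`, and `trim_undo` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UndoRecord:
    """An older row version, kept in the undo log instead of the table."""
    value: str
    xid: int                      # transaction that wrote this version
    prev: Optional["UndoRecord"]  # next-older version, if any


@dataclass
class Row:
    """The table holds only the newest version, overwritten in place."""
    value: str
    xid: int
    undo: Optional[UndoRecord] = None


def update(row: Row, new_value: str, xid: int) -> None:
    # Move the current version into the undo chain, then overwrite in place.
    row.undo = UndoRecord(row.value, row.xid, row.undo)
    row.value, row.xid = new_value, xid


def read(row: Row, snapshot_xid: int) -> Optional[str]:
    # Assumption: xid <= snapshot_xid means "committed before the snapshot".
    if row.xid <= snapshot_xid:
        return row.value
    rec = row.undo
    while rec is not None:
        if rec.xid <= snapshot_xid:
            return rec.value
        rec = rec.prev
    return None  # the row did not exist yet for this snapshot


def trim_undo(row: Row, oldest_snapshot: int) -> None:
    # Versions older than the one the oldest snapshot sees can never be read again.
    if row.xid <= oldest_snapshot:
        row.undo = None
        return
    rec = row.undo
    while rec is not None:
        if rec.xid <= oldest_snapshot:
            rec.prev = None  # cut the chain below the oldest needed version
            return
        rec = rec.prev
```

Because old versions sit in the undo chain rather than in the table itself, trimming undo once no snapshot needs it reclaims space without scanning the table, which illustrates why this design avoids heap-style bloat.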

Neon

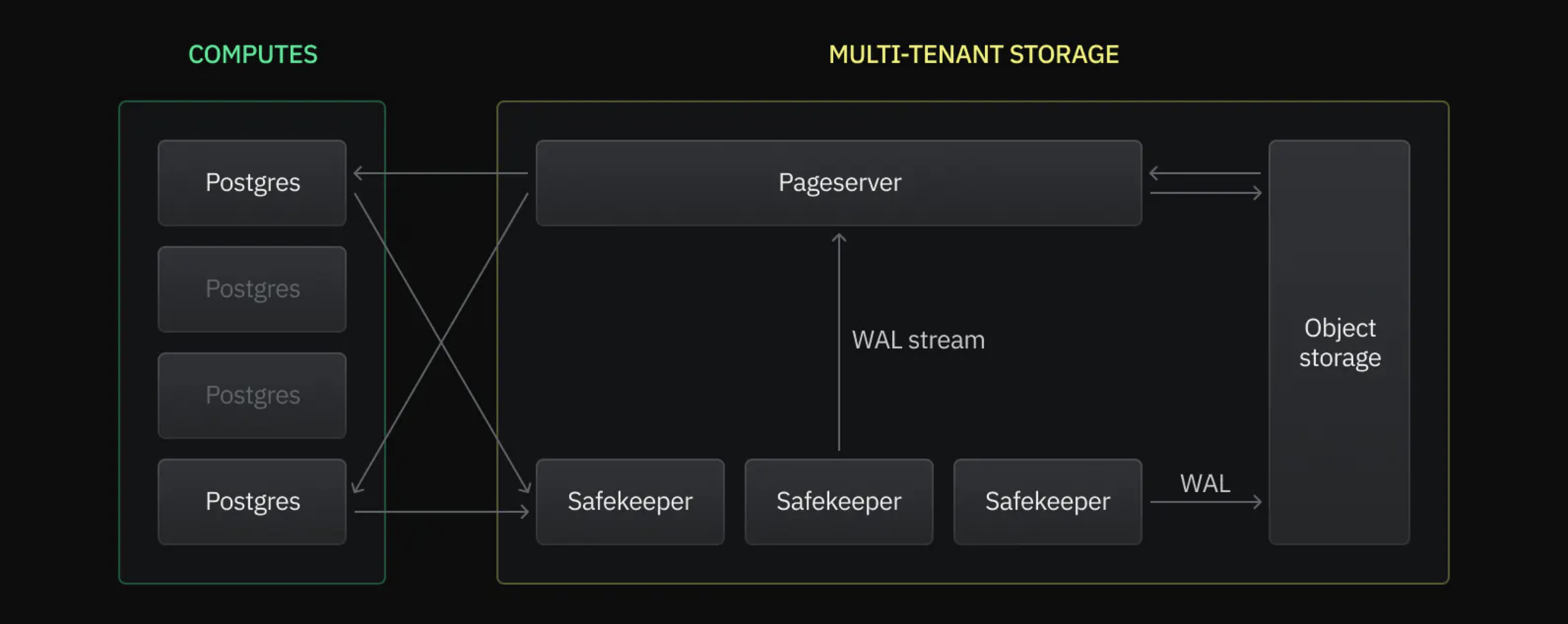

Neon uses the default Table Access Method (Heap) and replaces the storage layer. The WAL is written to the safekeepers, and blocks are read from page servers backed by object storage, providing instant branching and scale-to-zero capabilities.
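Neon's page servers hand a compute node a page as of a given LSN (what Neon's documentation calls a GetPage@LSN request) by starting from a stored page image and replaying the WAL records received from the safekeepers. The toy model below sketches that idea in Python. `PageHistory` and its methods are hypothetical, and a "page" here is a small dict of slot values rather than an 8KB block.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class PageHistory:
    """One page as a page server might keep it: a base image plus later WAL."""
    base_lsn: int
    base_image: Dict[int, str]  # toy page: slot -> value
    wal: List[Tuple[int, int, str]] = field(default_factory=list)  # (lsn, slot, value)

    def apply_wal(self, lsn: int, slot: int, value: str) -> None:
        # WAL must arrive in increasing LSN order, after the base image.
        if lsn <= self.base_lsn or (self.wal and lsn <= self.wal[-1][0]):
            raise ValueError("WAL records must arrive in increasing LSN order")
        self.wal.append((lsn, slot, value))

    def get_page_at(self, lsn: int) -> Dict[int, str]:
        # Rebuild the page by replaying every WAL record up to the requested LSN.
        if lsn < self.base_lsn:
            raise ValueError("requested LSN predates the stored base image")
        page = dict(self.base_image)  # copy, so the base image stays intact
        for rec_lsn, slot, value in self.wal:
            if rec_lsn > lsn:
                break
            page[slot] = value
        return page
```

In this model any retained LSN can be materialized on demand, which is the property that makes branching and point-in-time reads cheap on a remote-storage design.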

Architecture comparison

CPU Scalability

OrioleDB eliminates scalability bottlenecks in the PostgreSQL buffer manager and WAL writer by introducing a new shared memory caching layer based on squizzled pointers and row-level write-ahead logging (WAL). As a result, OrioleDB can sustain high-intensity read-write workloads on large virtual machines.
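Stock PostgreSQL locates cached pages through a shared buffer-mapping hash table. The sketch below illustrates the general technique of pointer swizzling, which we assume the squizzled-pointer cache is closely related to: once a page is in memory, the link to it holds a direct pointer, so later traversals skip the lookup entirely. It is a single-threaded Python toy. `Page`, `Ref`, `Cache`, and the dict standing in for disk are hypothetical, and nothing here reflects OrioleDB's actual concurrency control.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Page:
    payload: str


@dataclass
class Ref:
    """A link to a child page: always a block number, plus a pointer once loaded."""
    block: int
    page: Optional[Page] = None  # set when the link is "swizzled"


class Cache:
    def __init__(self, disk: Dict[int, Page]):
        self.disk = disk  # hypothetical block store: block number -> Page
        self.loads = 0    # how many times the slow lookup path ran

    def follow(self, ref: Ref) -> Page:
        if ref.page is None:
            # Unswizzled link: look the block up once and remember the pointer.
            self.loads += 1
            ref.page = self.disk[ref.block]
        # Swizzled link: a plain pointer dereference, no shared hash table.
        return ref.page

    def evict(self, ref: Ref) -> None:
        # Unswizzle: drop the in-memory pointer; the block number stays valid.
        ref.page = None
```

Following the same link many times triggers only one lookup until the page is evicted; removing that shared lookup from the hot path is the kind of change that helps on machines with many cores.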

Neon compute nodes scale roughly like stock PostgreSQL. However, Neon can instantly add more read-only compute nodes attached to the same multi-tenant storage.

IO Scalability

OrioleDB implements copy-on-write checkpoints, which improve write locality. In addition, OrioleDB’s row-level WAL saves write IOPS because it produces a smaller volume of log data.
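A back-of-the-envelope model shows why a row-level WAL can reduce write volume. With `full_page_writes` enabled, PostgreSQL logs a full page image the first time each page is modified after a checkpoint. The row-level side below is a hypothetical model in which each update logs only a fixed header plus the new row. All sizes are parameters you supply, not measured OrioleDB or PostgreSQL figures, and the heap side is an upper bound because PostgreSQL can omit the unused "hole" in a page image.

```python
PAGE_SIZE = 8192  # PostgreSQL's default block size, in bytes


def heap_wal_bytes(updates: int, pages_touched: int, record_bytes: int) -> int:
    """Upper-bound WAL volume for page-oriented logging right after a checkpoint.

    Each update writes one record; the first change to each page after the
    checkpoint also writes a full page image.
    """
    return updates * record_bytes + pages_touched * PAGE_SIZE


def row_wal_bytes(updates: int, row_bytes: int, header_bytes: int) -> int:
    """Hypothetical row-level WAL: each update logs a header plus the new row."""
    return updates * (header_bytes + row_bytes)
```

With illustrative inputs of 10,000 single-row updates spread over 10,000 distinct pages, 100-byte records, 100-byte rows, and a 24-byte header, `heap_wal_bytes(10_000, 10_000, 100)` returns 82,920,000 and `row_wal_bytes(10_000, 100, 24)` returns 1,240,000. Real ratios depend heavily on the workload and on how often checkpoints occur.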

Neon implements a distributed network storage layer that can scale out almost without limit. The drawback is network latency, which can be significant compared with fast local NVMe storage.
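The latency trade-off can be reasoned about with a simple expected-value formula: if a fraction `hit_ratio` of page reads is served locally and the rest must go to slower storage, the average read latency is the weighted sum of the two. The Python helpers below encode that formula and solve it for the hit ratio needed to meet a target. The function names are hypothetical, and the latencies you pass in are your own assumptions, not measured Neon or NVMe figures.

```python
def expected_read_latency_us(hit_ratio: float, local_us: float, remote_us: float) -> float:
    """Average latency of one page read when misses go to a slower tier."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return hit_ratio * local_us + (1.0 - hit_ratio) * remote_us


def required_hit_ratio(target_us: float, local_us: float, remote_us: float) -> float:
    """Hit ratio at which the expected read latency equals target_us."""
    if not local_us <= target_us <= remote_us or local_us == remote_us:
        raise ValueError("need local_us <= target_us <= remote_us and local_us < remote_us")
    # Solve target = h * local + (1 - h) * remote for h.
    return (remote_us - target_us) / (remote_us - local_us)
```

Because the remote term is multiplied by the miss rate, even a small fraction of network reads can dominate the average when remote latency is much higher than local latency.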

VACUUM & Data bloat

OrioleDB implements block-level and row-level UNDO logs, eliminating the need for routine VACUUM operations. Together with the automatic merging of sparse pages, the UNDO logs minimize the risk of bloat.
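The automatic merging of sparse pages can be pictured with the sketch below: neighboring pages that are both sparsely filled, and whose rows fit together, are folded into one page, so space freed by deletes is returned without a VACUUM pass. OrioleDB's real merge policy is not described in this post, so the `sparse_fill` threshold and the left-to-right, adjacent-pairs strategy are assumptions made for illustration; `merge_sparse_pages` is a hypothetical name.

```python
from typing import List


def merge_sparse_pages(
    pages: List[List[int]], capacity: int, sparse_fill: float = 0.5
) -> List[List[int]]:
    """Fold each sparse page into its sparse left neighbor when both fit in one page.

    A page is "sparse" when it holds fewer than sparse_fill * capacity rows.
    Pages are assumed to be in key order; the input list is not modified.
    """
    threshold = sparse_fill * capacity
    merged: List[List[int]] = []
    for page in pages:
        prev = merged[-1] if merged else None
        if (
            prev is not None
            and len(prev) < threshold
            and len(page) < threshold
            and len(prev) + len(page) <= capacity
        ):
            prev.extend(page)  # prev is already a copy, so this is safe
        else:
            merged.append(list(page))
    return merged
```

For example, with `capacity=4`, the pages `[[1], [2], [3, 4, 5, 6], [7], [8]]` become `[[1, 2], [3, 4, 5, 6], [7, 8]]`.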

Neon utilizes stock PostgreSQL VACUUM on the primary compute node. The scalability of multi-tenant storage mitigates the disadvantages of VACUUM and the risk of bloat.

Separation of Storage and Compute

Neon

Neon was designed around a hard split from day one. A compute node, stateless apart from its own shared memory, streams WAL to a quorum of Safekeepers (a replicated log service) and serves reads by pulling 8KB pages from Page servers. Page servers keep hot blocks on SSD and push cold layers to S3‑compatible object storage. Because compute has no durable state, it can be started, paused, cloned, or discarded in seconds, enabling features such as instant branching, scale‑to‑zero, and one‑click read replicas. The storage tier is multi‑tenant and replicates data across multiple availability zones (AZs).

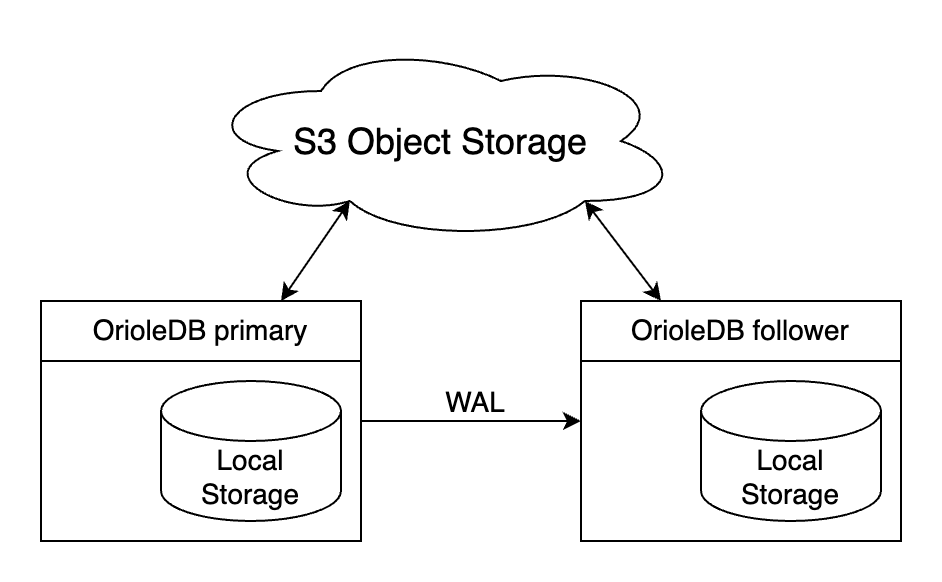
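Because Neon's compute holds no durable state, a branch can be created without copying data. In Neon's design, as we understand it, a branch only needs to reference its parent and the LSN at which it forked, and reads below that point fall through to the parent's history. The Python sketch below models that copy-on-write idea; `Timeline` and its methods are hypothetical and store key/value writes rather than pages.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Timeline:
    parent: Optional["Timeline"] = None
    branch_lsn: int = 0  # fork point in the parent's history
    writes: List[Tuple[int, str, str]] = field(default_factory=list)  # (lsn, key, value)

    def write(self, lsn: int, key: str, value: str) -> None:
        # Writes must come after the fork point and in increasing LSN order.
        if lsn <= self.branch_lsn or (self.writes and lsn <= self.writes[-1][0]):
            raise ValueError("writes must have increasing LSNs after the branch point")
        self.writes.append((lsn, key, value))

    def branch(self, at_lsn: int) -> "Timeline":
        # Creating a branch copies nothing: it records the parent and the fork LSN.
        return Timeline(parent=self, branch_lsn=at_lsn)

    def read(self, key: str, lsn: int) -> Optional[str]:
        # Newest own write at or below lsn wins...
        for rec_lsn, k, v in reversed(self.writes):
            if k == key and rec_lsn <= lsn:
                return v
        # ...otherwise fall back to the parent as it was at the fork point.
        if self.parent is not None:
            return self.parent.read(key, min(lsn, self.branch_lsn))
        return None
```

A branch created at LSN 15 keeps seeing the parent's data as of LSN 15, while later writes on either side stay isolated from each other.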

OrioleDB

OrioleDB can also leverage distributed S3 object storage. In its experimental S3 mode, OrioleDB can automatically evict cold data to S3, synchronize hot storage with S3 at checkpoints, and archive WAL files to S3. Local storage then acts as a cache for the data stored in S3. Multiple replicas can connect to the same S3 storage (this is still in development), but each replica still needs its own local storage. Like Neon, OrioleDB in S3 mode can seamlessly scale to zero when the database has no load.
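The "local storage as a cache for S3" arrangement can be modeled as an LRU cache in front of an object store: cold pages are evicted to S3, a checkpoint copies hot pages to S3, and once S3 holds everything the local copy can be discarded to scale to zero. This is a hypothetical Python sketch: `TieredStore` and the dict standing in for a bucket are not OrioleDB APIs, and the model ignores WAL archiving, durability, and concurrency.

```python
from collections import OrderedDict
from typing import Dict


class TieredStore:
    """Local storage as an LRU cache in front of an object store."""

    def __init__(self, local_capacity: int):
        if local_capacity < 1:
            raise ValueError("local_capacity must be at least 1")
        self.local: "OrderedDict[int, bytes]" = OrderedDict()  # hot pages, LRU order
        self.s3: Dict[int, bytes] = {}                          # stand-in for a bucket
        self.capacity = local_capacity

    def write(self, page_no: int, data: bytes) -> None:
        self.local[page_no] = data
        self.local.move_to_end(page_no)  # mark as most recently used
        self._evict()

    def read(self, page_no: int) -> bytes:
        if page_no in self.local:
            self.local.move_to_end(page_no)  # local hit
            return self.local[page_no]
        data = self.s3[page_no]              # miss: fetch from object storage
        self.local[page_no] = data           # cache it locally
        self._evict()
        return data

    def checkpoint(self) -> None:
        # Synchronize hot pages so S3 holds a complete copy of the data.
        self.s3.update(self.local)

    def drop_local(self) -> None:
        # Scale to zero: after a checkpoint, the local cache can be discarded.
        self.checkpoint()
        self.local.clear()

    def _evict(self) -> None:
        while len(self.local) > self.capacity:
            page_no, data = self.local.popitem(last=False)  # least recently used
            self.s3[page_no] = data                          # cold page moves to S3
```

The design choice mirrors the table that follows: only hot data must fit locally, while everything else lives in object storage and is fetched on a miss.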

| | OrioleDB | Neon |
|---|---|---|
| Cold data | Stored in S3 | Stored in S3 |
| Hot data | Must fit in local storage; low-latency access | Must fit in page servers; network latency on access |
| Scale down to zero | Yes | Yes |

Production Readiness

OrioleDB

- Current status — Public beta (beta10); not yet recommended for production use.

- Upstream patches — Requires a patched PostgreSQL 17 build; core patches are under review but not yet merged.

- Support & SLA — Community support on GitHub. Supabase offers OrioleDB in experimental projects.

Neon

- Current status — Generally available on AWS since August 2024, and on Azure (native integration) since May 14, 2025.

- PostgreSQL patches — Neon's compute nodes also run a patched PostgreSQL (enhanced WAL-streaming protocol, remote storage hooks, tuned fsync settings). The patchset is maintained by Neon and rebased onto each major PostgreSQL release while upstream discussions continue.

- Support & SLA — 24×7 support on Business/Enterprise tiers with a 99.95% availability SLA; public status page and multi‑region deployments.

Conclusion

Flexibility and extensibility have always been strengths of PostgreSQL. OrioleDB and Neon push these traits in different directions.

- OrioleDB is the choice when single‑node raw throughput, predictable latency, and freedom from VACUUM and bloat matter most. The UNDO‑based MVCC, copy‑on‑write checkpoints, and squizzled‑pointer cache squeeze every last IOP and CPU cycle out of modern hardware. The experimental S3 mode extends those benefits with cheaper storage tiers, without giving up local‑disk speed for hot data.

- Neon shines when you want hands‑off operations, instant branching, and elastic compute. The remote‑first architecture cuts the cord between storage and CPU, letting you spin up new read replicas or scale idle workloads down to zero.

Both projects move fast. Watch for OrioleDB’s S3 mode to hit GA and for self‑hosted Neon clusters to mature. The Postgres you deploy in 2026 may look very different from the one you know today.